Cluster environment overview

OS: CentOS 6.9 x64

master 172.16.12.142

slave1 172.16.12.140

slave2 172.16.12.141
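
Later steps address the nodes by hostname (for example `scp ... slave1:` and `master:9001`), so every machine has to resolve `master`, `slave1`, and `slave2`. The original does not show how that was set up. The sketch below assumes it is done through `/etc/hosts` rather than DNS; the function name `cluster_hosts` is only illustrative.

```shell
#!/bin/sh
# Print /etc/hosts entries for the three nodes listed above.
# Assumption: names are resolved through /etc/hosts, not DNS.
cluster_hosts() {
    cat <<'EOF'
172.16.12.142 master
172.16.12.140 slave1
172.16.12.141 slave2
EOF
}

# Usage (as root, on each of the three machines):
#   cluster_hosts >> /etc/hosts
```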

Configuring SSH trust:

1. Generate a key pair on each of the three machines

[root@master ~]# ssh-keygen -t rsa

[root@slave1 ~]# ssh-keygen -t rsa

[root@slave2 ~]# ssh-keygen -t rsa

2. Copy the public key to the other two machines

#master

[root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.12.140

[root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.12.141

#slave1

[root@slave1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.12.141

[root@slave1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.12.142

#slave2

[root@slave2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.12.140

[root@slave2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.12.142
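
The six `ssh-copy-id` commands above follow one pattern: each node sends its key to the other two. A minimal sketch of that pattern as a loop, where `peers_of` is a hypothetical helper introduced here and is not part of OpenSSH:

```shell
#!/bin/sh
# IPs of the three nodes, as listed in the cluster overview.
NODES="172.16.12.142 172.16.12.140 172.16.12.141"

# Print every node IP except the one passed as $1 (this machine's own IP).
peers_of() {
    for ip in $NODES; do
        [ "$ip" = "$1" ] || echo "$ip"
    done
}

# Usage on master (172.16.12.142); repeat on each slave with its own IP:
#   for ip in $(peers_of 172.16.12.142); do
#       ssh-copy-id -i ~/.ssh/id_rsa.pub "root@$ip"
#   done
# Afterwards, check that no password is requested:
#   ssh -o BatchMode=yes root@172.16.12.140 hostname
```

With `BatchMode=yes`, ssh fails instead of prompting, so a missing key shows up as an error rather than a password prompt.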

Configuring the Java environment

Note: version 1.7.x is used here; 1.8 also works. The steps must be carried out on all 3 servers.

1. Run on master

tar -zxvf jdk-7u67-linux-x64.tar.gz

mv jdk1.7.0_67 /usr/local/

#Add the system environment variables

[root@master src]# vim /etc/profile

export JAVA_HOME=/usr/local/jdk1.7.0_67

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin

#Reload the environment variables

[root@master src]# source /etc/profile

#If the following command prints output like this, Java is set up correctly

[root@master ~]# java -version

java version "1.7.0_67"

Java(TM) SE Runtime Environment (build 1.7.0_67-b01)

Java HotSpot(TM) 64-Bit Server VM (build 24.65-b04, mixed mode)

2. Configure the slave nodes

scp -rp /usr/local/jdk1.7.0_67 slave1:/usr/local/jdk1.7.0_67

scp -rp /usr/local/jdk1.7.0_67 slave2:/usr/local/jdk1.7.0_67

vim /etc/profile #same steps on slave1 and slave2

export JAVA_HOME=/usr/local/jdk1.7.0_67

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin
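
Editing `/etc/profile` by hand on every node makes it easy to add the same export twice. One possible way to script the edit is sketched below; `append_once` is an illustrative helper name, not a standard command, and it adds a line only when that exact line is not already in the file.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already there.
# $1 = file, $2 = line. `append_once` is an illustrative helper name.
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Usage on slave1 and slave2 (as root):
#   append_once /etc/profile 'export JAVA_HOME=/usr/local/jdk1.7.0_67'
#   append_once /etc/profile 'export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib'
#   append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
#   source /etc/profile && java -version
```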

Downloading Hadoop

#Run on master:

wget http://apache.melbourneitmirror.net/hadoop/common/hadoop-2.6.5/hadoop-2.6.5.tar.gz

tar zxvf hadoop-2.6.5.tar.gz

Hadoop configuration files (7 in total)

1. Edit the configuration files

#Run on master

cd hadoop-2.6.5/etc/hadoop

- hadoop-env.sh

# cd hadoop-2.6.5/etc/hadoop #directory that holds the configuration files

# vim hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.7.0_67

- yarn-env.sh

# vim yarn-env.sh

export JAVA_HOME=/usr/local/jdk1.7.0_67

- slaves

# vim slaves

slave1

slave2

- core-site.xml

# vim core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.16.12.142:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/src/hadoop-2.6.5/tmp</value>

</property>

</configuration>

- hdfs-site.xml

# vim hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/src/hadoop-2.6.5/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/src/hadoop-2.6.5/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

- mapred-site.xml

# vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- yarn-site.xml

# vim yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>
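
After editing several XML files by hand, it helps to read a few values back before starting anything. The sketch below does a plain-text scan for a property's value. It assumes each `<name>` and `<value>` element sits on its own line, as in the files above, and `site_value` is a hypothetical helper, not a Hadoop tool.

```shell
#!/bin/sh
# Print the <value> that follows <name>$2</name> in Hadoop site file $1.
# Assumes <name> and <value> are each on their own line; this is a
# plain-text scan, not a real XML parser.
site_value() {
    awk -v key="$2" '
        index($0, "<name>" key "</name>") { found = 1; next }
        found && /<value>/ {
            sub(/.*<value>/, ""); sub(/<\/value>.*/, "")
            print; exit
        }
    ' "$1"
}

# Usage, from hadoop-2.6.5/etc/hadoop:
#   site_value core-site.xml fs.defaultFS
#   site_value yarn-site.xml yarn.resourcemanager.webapp.address
```

Once the Hadoop `bin` directory is on `PATH`, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves, which is the more authoritative check.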

2. Create the temporary directory and the data directories

mkdir /usr/local/src/hadoop-2.6.5/tmp

mkdir -p /usr/local/src/hadoop-2.6.5/dfs/name

mkdir -p /usr/local/src/hadoop-2.6.5/dfs/data

3. Configure the Hadoop environment variables

Note: run on master, slave1, and slave2

vim /etc/profile

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin

#Reload the environment variables

source /etc/profile

4. Copy the Hadoop installation to the slave nodes

#Run on master

scp -rp /usr/local/src/hadoop-2.6.5 root@slave1:/usr/local/src/hadoop-2.6.5

scp -rp /usr/local/src/hadoop-2.6.5 root@slave2:/usr/local/src/hadoop-2.6.5
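
With more than two slaves, the copy can be driven from the `slaves` file written earlier instead of one `scp` line per host. A rough sketch, assuming that file lists one hostname per line; `slave_hosts` is an illustrative helper name.

```shell
#!/bin/sh
# Print host names from a slaves file, skipping blank lines and # comments.
slave_hosts() {
    grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$1"
}

# Usage on master:
#   for h in $(slave_hosts /usr/local/src/hadoop-2.6.5/etc/hadoop/slaves); do
#       scp -rp /usr/local/src/hadoop-2.6.5 "root@$h:/usr/local/src/hadoop-2.6.5"
#   done
```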

Starting and stopping the cluster

Note: run on master only

#Format the NameNode

[root@master src]# hadoop namenode -format

#Start the services

[root@master sbin]# ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

18/04/13 11:13:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop-2.6.5/logs/hadoop-root-namenode-master.out

slave1: starting datanode, logging to /usr/local/src/hadoop-2.6.5/logs/hadoop-root-datanode-slave1.out

slave2: starting datanode, logging to /usr/local/src/hadoop-2.6.5/logs/hadoop-root-datanode-slave2.out

Starting secondary namenodes [master]

master: starting secondarynamenode, logging to /usr/local/src/hadoop-2.6.5/logs/hadoop-root-secondarynamenode-master.out

18/04/13 11:13:57 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop-2.6.5/logs/yarn-root-resourcemanager-master.out

slave1: starting nodemanager, logging to /usr/local/src/hadoop-2.6.5/logs/yarn-root-nodemanager-slave1.out

slave2: starting nodemanager, logging to /usr/local/src/hadoop-2.6.5/logs/yarn-root-nodemanager-slave2.out
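
The first line of the output above marks `start-all.sh` as deprecated and names `start-dfs.sh` and `start-yarn.sh` as the replacements. A small wrapper that runs the two in order might look like this; `start_cluster` is a hypothetical name, and the install path in the usage line is the one used in this article.

```shell
#!/bin/sh
# Start HDFS first, then YARN, using the two scripts the deprecation
# notice recommends. $1 = Hadoop install directory.
# YARN is started only if the HDFS script exits successfully.
start_cluster() {
    "$1/sbin/start-dfs.sh" && "$1/sbin/start-yarn.sh"
}

# Usage on master:
#   start_cluster /usr/local/src/hadoop-2.6.5
# The matching shutdown order is stop-yarn.sh, then stop-dfs.sh.
```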

Handling the warning:

18/04/13 11:13:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

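Fix (this only silences the warning; the built-in Java classes are still used):

Edit the logging configuration file log4j.properties

[root@master hadoop]# vim log4j.properties #add the following line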

log4j.logger.org.apache.hadoop.util.NativeCodeLoader=ERROR

#Check the cluster processes (jps)

[root@master sbin]# jps

1973 SecondaryNameNode

2119 ResourceManager

1797 NameNode

2377 Jps

[root@slave1 src]# jps

1763 Jps

1550 DataNode

1644 NodeManager

[root@slave2 src]# jps

1763 Jps

1550 DataNode

1644 NodeManager
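
Comparing the `jps` listings by eye works for three nodes. As a sketch of how the same check could be scripted, the helper below reads `jps` output on stdin and reports any expected daemon that is absent; `missing_daemons` is an illustrative name, not a Hadoop or JDK command.

```shell
#!/bin/sh
# Report which expected daemons are absent from `jps` output read on stdin.
# Arguments are the daemon names to look for. Prints one "missing: NAME"
# line per absent daemon and returns non-zero if any is missing.
missing_daemons() {
    jps_out="$(cat)"
    rc=0
    for name in "$@"; do
        # -w matches whole words, so "NameNode" does not match
        # "SecondaryNameNode".
        if ! printf '%s\n' "$jps_out" | grep -qw -- "$name"; then
            echo "missing: $name"
            rc=1
        fi
    done
    return "$rc"
}

# Usage on master:
#   jps | missing_daemons NameNode SecondaryNameNode ResourceManager
# For a slave, from master (full path, since a non-interactive ssh
# session may not load /etc/profile):
#   ssh slave1 /usr/local/jdk1.7.0_67/bin/jps | missing_daemons DataNode NodeManager
```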

#Stop the cluster

./sbin/stop-all.sh

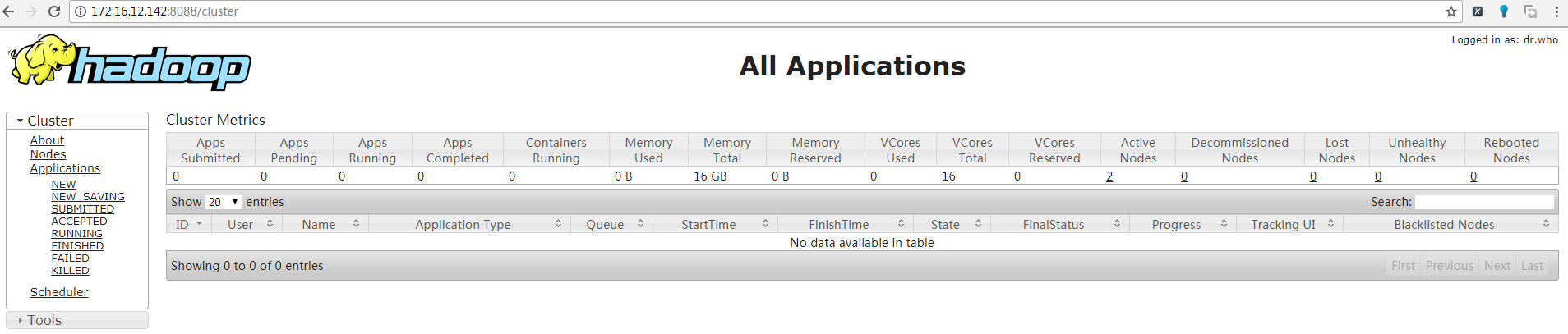

Monitoring web page

Open in a browser: http://172.16.12.142:8088

This article was written by Mr Gu and is licensed under the Creative Commons Attribution 4.0 International License.


Last edited: Apr 16, 2018 at 03:50 am